Algorithmic trading is a method of executing a large order (too large to fill all at once) using automated pre-programmed trading instructions that account for variables such as time, price, and volume[1] to send small slices of the order (child orders) out to the market over time. Such algorithms were developed so that traders do not need to constantly watch a stock and repeatedly send those slices out manually. Popular “algos” include Percentage of Volume, Pegged, VWAP, TWAP, Implementation Shortfall, and Target Close. In the twenty-first century, algorithmic trading has been gaining traction with both retail and institutional traders. In this sense, algorithmic trading is not an attempt to make a trading profit; it is simply a way to minimize the cost, market impact, and risk of executing an order.[2][3] It is widely used by investment banks, pension funds, mutual funds, and hedge funds because these institutional traders need to execute large orders in markets that cannot absorb the full size at once.
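As a minimal sketch of the slicing idea, the hypothetical helper `slice_order` below splits a parent order into near-equal child orders. It assumes a plain time-sliced (TWAP-style) schedule with no price limits, randomization, or feedback from fills, which production algorithms usually add:

```python
def slice_order(parent_qty: int, n_slices: int) -> list[int]:
    """Split a parent order into n_slices child orders of near-equal size.

    Any remainder shares are spread one by one over the earliest slices,
    so the child orders always sum exactly to the parent quantity.
    """
    if parent_qty < 0 or n_slices <= 0:
        raise ValueError("parent_qty must be >= 0 and n_slices > 0")
    base, remainder = divmod(parent_qty, n_slices)
    return [base + (1 if i < remainder else 0) for i in range(n_slices)]


# Example: 10,000 shares sent as 6 child orders over the trading window.
print(slice_order(10_000, 6))  # [1667, 1667, 1667, 1667, 1666, 1666]
```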

The term is also used to mean automated trading systems, which do have the goal of making a profit. Also known as black box trading, these systems encompass trading strategies that rely heavily on complex mathematical formulas and high-speed computer programs.[4][5]

Such systems run strategies including market making, inter-market spreading, arbitrage, or pure speculation such as trend following. Many fall into the category of high-frequency trading (HFT), which is characterized by high turnover and high order-to-trade ratios.[6] As a result, in February 2012, the Commodity Futures Trading Commission (CFTC) formed a special working group that included academics and industry experts to advise the CFTC on how best to define HFT.[7][8] HFT strategies use computers that make elaborate decisions to initiate orders based on electronically received information, before human traders are capable of processing the information they observe. Algorithmic trading and HFT have resulted in a dramatic change in market microstructure, particularly in the way liquidity is provided.[9]

Emblematic examples

Profitability projections by the TABB Group, a financial services industry research firm, for the US equities HFT industry were US$1.3 billion before expenses for 2014,[10] significantly down from the maximum of US$21 billion in profits that the 300 securities firms and hedge funds then specializing in this type of trading took in 2008,[11] a figure the authors had then called “relatively small” and “surprisingly modest” compared with the market’s overall trading volume. In March 2014, Virtu Financial, a high-frequency trading firm, reported that over five years the firm as a whole was profitable on 1,277 out of 1,278 trading days,[12] losing money on just one day, empirically demonstrating the law-of-large-numbers benefit of making thousands to millions of tiny, low-risk, low-edge trades every trading day.[13]
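The law-of-large-numbers argument can be made concrete with a normal approximation. Assuming independent trades with a hypothetical mean profit `edge` and standard deviation `sigma` per trade, the total of n trades in a day has mean n·edge and standard deviation √n·sigma, so the probability of a profitable day is Φ(√n·edge/sigma), which rises toward 1 as n grows. The helper name and numbers below are illustrative only:

```python
import math


def prob_profitable_day(n_trades: int, edge: float, sigma: float) -> float:
    """P(sum of n i.i.d. trade P&Ls > 0) under a normal approximation."""
    # Daily P&L ~ Normal(n * edge, sqrt(n) * sigma), so
    # P(P&L > 0) = Phi(sqrt(n) * edge / sigma).
    z = math.sqrt(n_trades) * edge / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical per-trade edge of 0.01 with a per-trade standard deviation of 1.0.
for n in (100, 10_000, 1_000_000):
    print(n, round(prob_profitable_day(n, edge=0.01, sigma=1.0), 4))
```

With these made-up numbers the probability climbs from about 54% at 100 trades to about 84% at 10,000 and is essentially 1 at a million. In practice trade outcomes are often positively correlated, which widens the daily distribution and weakens the effect.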

Figure: Algorithmic trading as a percentage of market volume.[14]

A third of all European Union and United States stock trades in 2006 were driven by automatic programs, or algorithms.[15] As of 2009, studies suggested HFT firms accounted for 60–73% of all US equity trading volume, with that number falling to approximately 50% in 2012.[16][17] In 2006, at the London Stock Exchange, over 40% of all orders were entered by algorithmic traders, with 60% predicted for 2007. American markets and European markets generally have a higher proportion of algorithmic trades than other markets, and estimates for 2008 range as high as an 80% proportion in some markets. Foreign exchange markets also have active algorithmic trading (about 25% of orders in 2006).[18] Futures markets are considered fairly easy to integrate into algorithmic trading,[19] with about 20% of options volume expected to be computer-generated by 2010.[needs update][20] Bond markets are moving toward more access to algorithmic traders.[21]

Algorithmic trading and HFT have been the subject of much public debate since the U.S. Securities and Exchange Commission and the Commodity Futures Trading Commission said in reports that an algorithmic trade entered by a mutual fund company triggered a wave of selling that led to the 2010 Flash Crash.[22][23][24][25][26][27][28][29] The same reports found that HFT strategies may have contributed to subsequent volatility by rapidly pulling liquidity from the market. As a result of these events, the Dow Jones Industrial Average suffered its second-largest intraday point swing up to that date, though prices quickly recovered. (See List of largest daily changes in the Dow Jones Industrial Average.) A July 2011 report by the International Organization of Securities Commissions (IOSCO), an international body of securities regulators, concluded that while “algorithms and HFT technology have been used by market participants to manage their trading and risk, their usage was also clearly a contributing factor in the flash crash event of May 6, 2010.”[30][31] However, other researchers have reached a different conclusion. One 2010 study found that HFT did not significantly alter trading inventory during the Flash Crash.[32] Some algorithmic trading ahead of index fund rebalancing transfers profits from investors.[33][34][35]

History

Computerization of the order flow in financial markets began in the early 1970s. Landmarks include the introduction of the New York Stock Exchange’s “designated order turnaround” system (DOT, and later SuperDOT), which routed orders electronically to the proper trading post, where they were executed manually. The “opening automated reporting system” (OARS) aided the specialist in determining the market-clearing opening price (SOR; Smart Order Routing).

Program trading is defined by the New York Stock Exchange as an order to buy or sell 15 or more stocks valued at over US$1 million total. In practice this means that all program trades are entered with the aid of a computer. In the 1980s, program trading became widely used in trading between the S&P 500 equity and futures markets.

In stock index arbitrage, a trader buys (or sells) a stock index futures contract such as the S&P 500 futures and sells (or buys) a portfolio of up to 500 stocks (possibly a much smaller representative subset) at the NYSE, matched against the futures trade. The program trade at the NYSE would be pre-programmed into a computer to enter the order automatically into the NYSE’s electronic order routing system whenever the futures price and the stock index were far enough apart to make a profit.
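“Far enough apart” is usually judged against a theoretical futures price. A minimal sketch, assuming the textbook cost-of-carry fair value F* = S·e^((r−q)T) with a continuously compounded interest rate r, dividend yield q, and a hypothetical round-trip trading cost in index points; the function names are illustrative, not from the source:

```python
import math


def futures_fair_value(index_level: float, r: float, q: float, t_years: float) -> float:
    """Cost-of-carry fair value of an index future (continuous compounding)."""
    return index_level * math.exp((r - q) * t_years)


def index_arb_signal(futures_price: float, index_level: float, r: float,
                     q: float, t_years: float, cost: float) -> str:
    """Say which program trade, if any, the futures mispricing would justify.

    cost is the assumed round-trip cost, in index points, of trading both the
    futures contract and the stock basket.
    """
    gap = futures_price - futures_fair_value(index_level, r, q, t_years)
    if gap > cost:
        return "sell futures, buy stocks"  # futures rich relative to the basket
    if gap < -cost:
        return "buy futures, sell stocks"  # futures cheap relative to the basket
    return "no trade"


# Index at 5000, 5% rate, 1.5% dividend yield, 3 months to expiry.
print(round(futures_fair_value(5000, 0.05, 0.015, 0.25), 2))
print(index_arb_signal(5060, 5000, 0.05, 0.015, 0.25, cost=5))
```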

At about the same time portfolio insurance was designed to create a synthetic put option on a stock portfolio by dynamically trading stock index futures according to a computer model based on the Black–Scholes option pricing model.
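The core calculation of such a model can be sketched briefly. A stock portfolio plus a European put struck at the insured floor has Black–Scholes delta N(d1), so the replicating strategy keeps that fraction of its equity exposure and sells futures against the rest. This assumes no dividends, constant volatility, and a futures hedge that moves one-for-one with the portfolio; `hedge_fraction` is a hypothetical name:

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def hedge_fraction(s: float, floor: float, r: float, sigma: float, t_years: float) -> float:
    """Fraction of the portfolio to short in index futures to mimic a protective put.

    Stock plus a put struck at `floor` has delta N(d1); the remaining
    1 - N(d1) of the exposure is hedged with short futures.
    """
    d1 = (math.log(s / floor) + (r + 0.5 * sigma ** 2) * t_years) / (sigma * math.sqrt(t_years))
    return 1.0 - norm_cdf(d1)


# Floor of 90, 5% rate, 20% volatility, one year: the hedge grows as the market falls.
for level in (110, 100, 90):
    print(level, round(hedge_fraction(level, 90, 0.05, 0.2, 1.0), 3))
```

The fraction rises as the portfolio falls toward the floor, so the model sells more futures into a declining market and buys them back as it rises.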

Both strategies, often simply lumped together as “program trading”, were blamed by many people (for example by the Brady report) for exacerbating or even starting the 1987 stock market crash. Yet the impact of computer driven trading on stock market crashes is unclear and widely discussed in the academic community.[36]

Financial markets with fully electronic execution and similar electronic communication networks developed in the late 1980s and 1990s. In the U.S., decimalization, which changed the minimum tick size from 1/16 of a dollar (US$0.0625) to US$0.01 per share in 2001,[37] may have encouraged algorithmic trading, as it changed the market microstructure by permitting smaller differences between the bid and offer prices, decreasing the market makers’ trading advantage and thus increasing market liquidity.

This increased market liquidity led institutional traders to split up orders according to computer algorithms so they could execute orders at a better average price. These average-price benchmarks are calculated by computer using the time-weighted average price or, more usually, the volume-weighted average price.
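Both benchmarks are simple averages. A minimal sketch, assuming equally spaced price samples for TWAP and trade-level prices and volumes for VWAP (the numbers are made up):

```python
def twap(prices: list[float]) -> float:
    """Time-weighted average price, assuming prices sampled at equal intervals."""
    return sum(prices) / len(prices)


def vwap(prices: list[float], volumes: list[float]) -> float:
    """Volume-weighted average price: sum(price * volume) / sum(volume)."""
    total_volume = sum(volumes)
    return sum(p * v for p, v in zip(prices, volumes)) / total_volume


prices = [10.00, 10.10, 10.05, 9.95]
volumes = [100, 300, 200, 400]
print(twap(prices), vwap(prices, volumes))
```

Here VWAP (10.02) sits below TWAP (10.025) because the heaviest volume traded at the lowest price.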

It is over. The trading that existed down the centuries has died. We have an electronic market today. It is the present. It is the future.

Robert Greifeld, NASDAQ CEO, April 2011[38]

A further encouragement for the adoption of algorithmic trading in the financial markets came in 2001 when a team of IBM researchers published a paper[39] at the International Joint Conference on Artificial Intelligence showing that, in experimental laboratory versions of the electronic auctions used in the financial markets, two algorithmic strategies (IBM’s own MGD, and Hewlett-Packard’s ZIP) could consistently outperform human traders. MGD was a modified version of the “GD” algorithm invented by Steven Gjerstad and John Dickhaut in 1996/7;[40] the ZIP algorithm had been invented at HP by Dave Cliff in 1996.[41] In their paper, the IBM team wrote that the financial impact of their results showing MGD and ZIP outperforming human traders “…might be measured in billions of dollars annually”; the IBM paper generated international media coverage.

As more electronic markets opened, other algorithmic trading strategies were introduced. These strategies are more easily implemented by computers, because machines can react more rapidly to temporary mispricing and examine prices from several markets simultaneously. Examples include Chameleon (developed by BNP Paribas), Stealth[42] (developed by Deutsche Bank), Sniper and Guerrilla (developed by Credit Suisse[43]), as well as arbitrage, statistical arbitrage, trend following, and mean reversion.

This type of trading is driving new demand for low-latency proximity hosting and global exchange connectivity. Understanding latency is essential when putting together a strategy for electronic trading. Latency refers to the delay between the transmission of information from a source and the reception of the information at a destination. Latency is bounded below by the speed of light: about 3.3 milliseconds per 1,000 kilometers in a vacuum, or roughly 4.9 milliseconds per 1,000 kilometers of optical fiber, in which light travels at about two-thirds of its vacuum speed. Any signal-regenerating or routing equipment adds latency beyond this lightspeed baseline.
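The lightspeed lower bound is easy to compute. A minimal sketch, assuming a typical refractive index of about 1.47 for silica fiber (an assumed round figure; real fibers vary) and ignoring all equipment delay; `one_way_latency_ms` is a hypothetical helper:

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumed typical value for silica fiber


def one_way_latency_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Lower-bound propagation delay in milliseconds, ignoring equipment delay."""
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1_000


print(round(one_way_latency_ms(1_000), 2))                          # 3.34 (vacuum)
print(round(one_way_latency_ms(1_000, FIBER_REFRACTIVE_INDEX), 2))  # 4.9 (optical fiber)
```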

Strategies

Trading ahead of index fund rebalancing

Most retirement savings, such as private pension funds or 401(k) and individual retirement accounts in the US, are invested in mutual funds, the most popular of which are index funds, which must periodically “rebalance” or adjust their portfolios to match the new prices and market capitalization of the underlying securities in the stock or other index that they track.[44][45] Profits are transferred from passive index investors to active investors, some of whom are algorithmic traders specifically exploiting the index rebalance effect. The magnitude of these losses incurred by passive investors has been estimated at 21–28 bp per year for the S&P 500 and 38–77 bp per year for the Russell 2000.[34] John Montgomery of Bridgeway Capital Management says that the resulting “poor investor returns” from trading ahead of mutual funds is “the elephant in the room” that “shockingly, people are not talking about.”[35]

Pairs trading

Pairs trading or pair trading is a long-short, ideally market-neutral strategy enabling traders to profit from transient discrepancies in the relative value of close substitutes. Unlike classic arbitrage, pairs trading cannot rely on the law of one price to guarantee convergence of prices. This is especially true when the strategy is applied to individual stocks, since these imperfect substitutes can in fact diverge indefinitely. In theory, the long-short nature of the strategy should make it work regardless of stock market direction. In practice, execution risk, persistent and large divergences, and a decline in volatility can make this strategy unprofitable for long periods of time (e.g. 2004–07). It belongs to the wider categories of statistical arbitrage, convergence trading, and relative value strategies.[46]
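One common way to implement the idea is a z-score on a regression spread. A minimal sketch, assuming the hedge ratio is an ordinary least squares slope estimated on history, an entry threshold of 2 standard deviations, and no cointegration test (all assumptions; `hedge_ratio` and `pair_signal` are hypothetical):

```python
import statistics


def hedge_ratio(y: list[float], x: list[float]) -> float:
    """OLS slope of y regressed on x (with intercept)."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


def pair_signal(y: list[float], x: list[float], entry_z: float = 2.0) -> tuple[str, float]:
    """Signal for the latest observation, estimating beta/mean/stdev on earlier data only."""
    hist_y, hist_x = y[:-1], x[:-1]
    beta = hedge_ratio(hist_y, hist_x)
    spread = [b - beta * a for a, b in zip(hist_x, hist_y)]
    mu, sd = statistics.fmean(spread), statistics.stdev(spread)
    z = (y[-1] - beta * x[-1] - mu) / sd
    if z > entry_z:
        return "short y, long x", z  # y rich relative to x
    if z < -entry_z:
        return "long y, short x", z  # y cheap relative to x
    return "no trade", z


x = [10.0 + i for i in range(11)]
y = [20.1, 21.9, 24.2, 25.8, 28.1, 29.9, 32.2, 33.8, 36.1, 37.9, 44.0]
print(pair_signal(y, x)[0])
```

A position is sized as one unit of y against beta units of x, so that common moves roughly cancel.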

Delta-neutral strategies

In finance, delta-neutral describes a portfolio of related financial securities whose value remains unchanged when the value of the underlying security changes by a small amount. Such a portfolio typically contains options and their corresponding underlying securities such that positive and negative delta components offset, making the portfolio’s value relatively insensitive to changes in the value of the underlying security.
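Once each option’s delta is known, neutralizing the portfolio is arithmetic. A minimal sketch, assuming deltas are supplied (for example, from a pricing model) and a contract multiplier of 100 shares; `hedge_shares` is hypothetical:

```python
def hedge_shares(positions: list[tuple[float, float]], multiplier: float = 100.0) -> float:
    """Shares of the underlying to trade so the portfolio's net delta is zero.

    positions: (contracts, delta per option) pairs; contracts are negative for
    short positions. multiplier: assumed number of shares per contract.
    """
    option_delta = sum(contracts * delta * multiplier for contracts, delta in positions)
    return -option_delta  # negative result = sell that many shares


# Long 10 calls (delta 0.6) and short 5 puts (delta -0.4): net +800 share-equivalents.
print(hedge_shares([(10, 0.6), (-5, -0.4)]))
```

Because option deltas change as the underlying moves, the hedge holds only for small moves and has to be rebalanced.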

Arbitrage

In economics and finance, arbitrage is the practice of taking advantage of a price difference between two or more markets: striking a combination of matching deals that capitalize upon the imbalance, the profit being the difference between the market prices. When used by academics, an arbitrage is a transaction that involves no negative cash flow at any probabilistic or temporal state and a positive cash flow in at least one state; in simple terms, it is the possibility of a risk-free profit at zero cost. Example: one of the most popular arbitrage trading opportunities involves S&P 500 futures and the S&P 500 stocks. On most trading days, a pricing disparity develops between the two. This happens when the prices of the stocks, which trade mostly on the NYSE and NASDAQ, either get ahead of or fall behind the S&P futures, which trade on the CME.

Conditions for arbitrage

Arbitrage is possible when one of three conditions is met:

- The same asset does not trade at the same price on all markets (the “law of one price” is temporarily violated).

- Two assets with identical cash flows do not trade at the same price.

- An asset with a known price in the future does not today trade at its future price discounted at the risk-free interest rate. This comparison applies to assets with negligible costs of storage, such as securities, but not to assets such as grain, whose storage costs must also be taken into account.

Arbitrage is not simply the act of buying a product in one market and selling it in another for a higher price at some later time. The long and short transactions should ideally occur simultaneously to minimize the exposure to market risk, or the risk that prices may change on one market before both transactions are complete. In practical terms, this is generally only possible with securities and financial products that can be traded electronically, and even then, by the time the first leg(s) of the trade are executed, the prices in the other legs may have worsened, locking in a guaranteed loss. Missing one of the legs of the trade (and subsequently having to open it at a worse price) is called ‘execution risk’ or, more specifically, ‘leg-in and leg-out risk’.[a]

In the simplest example, any good sold in one market should sell for the same price in another. Traders may, for example, find that the price of wheat is lower in agricultural regions than in cities, purchase the good, and transport it to another region to sell at a higher price. This type of price arbitrage is the most common, but this simple example ignores the cost of transport, storage, risk, and other factors. “True” arbitrage requires that there be no market risk involved. Where securities are traded on more than one exchange, arbitrage occurs by simultaneously buying on one and selling on the other. Such simultaneous execution, if perfect substitutes are involved, minimizes capital requirements, but in practice never creates a “self-financing” (free) position, as many sources incorrectly assume following the theory. As long as there is some difference in the market value and riskiness of the two legs, capital has to be put up in order to carry the long-short arbitrage position.
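A minimal sketch of checking a two-venue price difference net of costs, assuming both legs fill in full at the quoted prices (which is precisely the leg risk that real execution faces) and a hypothetical flat fee per share on each venue; `Quote` and `arbitrage_opportunity` are illustrative names:

```python
from typing import NamedTuple, Optional


class Quote(NamedTuple):
    bid: float
    ask: float


def arbitrage_opportunity(a: Quote, b: Quote, qty: int,
                          fee_per_share: float) -> Optional[tuple[str, float]]:
    """Return (direction, net profit) for the better direction, or None if unprofitable."""
    candidates = [
        ("buy A, sell B", (b.bid - a.ask) * qty),
        ("buy B, sell A", (a.bid - b.ask) * qty),
    ]
    # Each share trades twice (once on each venue), so the fee is paid on both legs.
    costs = 2 * fee_per_share * qty
    direction, gross = max(candidates, key=lambda c: c[1])
    net = gross - costs
    return (direction, net) if net > 0 else None


print(arbitrage_opportunity(Quote(49.98, 50.00), Quote(50.03, 50.05), 1_000, 0.003))
```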

Mean reversion

Mean reversion is a mathematical methodology sometimes used for stock investing, but it can be applied to other processes. In general terms, the idea is that both a stock’s high and low prices are temporary, and that a stock’s price tends to revert toward an average price over time. An example of a mean-reverting process is the Ornstein–Uhlenbeck process.
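The Ornstein–Uhlenbeck process dX = θ(μ − X)dt + σ dW pulls X toward the long-run mean μ at speed θ while σ scales the random shocks. A minimal Euler–Maruyama simulation, with a hypothetical `simulate_ou` helper and illustrative parameters:

```python
import random


def simulate_ou(x0: float, mu: float, theta: float, sigma: float,
                dt: float, n_steps: int, seed=None) -> list[float]:
    """Euler-Maruyama path of dX = theta * (mu - X) dt + sigma dW."""
    rng = random.Random(seed)
    path = [x0]
    x = x0
    for _ in range(n_steps):
        # Drift pulls x toward mu; the Gaussian shock has variance sigma^2 * dt.
        x += theta * (mu - x) * dt + sigma * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        path.append(x)
    return path


# A price starting at 120 that reverts toward 100.
path = simulate_ou(x0=120.0, mu=100.0, theta=1.0, sigma=2.0, dt=0.01, n_steps=1_000, seed=7)
print(round(path[-1], 2))
```

With σ set to 0 the path decays smoothly to μ, which makes the mean-reverting drift easy to see in isolation.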

Mean reversion involves first identifying the trading range for a stock, and then computing the average price using analytical techniques that relate it to assets, earnings, and so on.

When the current market price is less than the average price, the stock is considered attractive for purchase, with the expectation that the price will rise. When the current market price is above the average price, the market price is expected to fall. In other words, deviations from the average price are expected to revert to the average.

The standard deviation of the most recent prices (e.g., the last 20) is often used as a buy or sell indicator.
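A minimal sketch of such an indicator, assuming a band of k standard deviations around the mean of the last 20 prices (a Bollinger-band-style rule; the function name and the default k = 2 are assumptions, not from the source):

```python
import statistics


def mean_reversion_signal(prices: list[float], window: int = 20, k: float = 2.0) -> str:
    """Compare the latest price with mean +/- k standard deviations of the last `window` prices.

    The window includes the latest price itself, as a typical band would.
    """
    recent = prices[-window:]
    mean = statistics.fmean(recent)
    sd = statistics.stdev(recent)
    last = prices[-1]
    if last < mean - k * sd:
        return "buy"   # unusually low: expect a move back up toward the mean
    if last > mean + k * sd:
        return "sell"  # unusually high: expect a move back down toward the mean
    return "hold"


history = [100.0, 101.0] * 10
print(mean_reversion_signal(history + [90.0]))
```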

Stock reporting services (such as Yahoo! Finance, MS Investor, Morningstar, etc.) commonly offer moving averages for periods such as 50 and 100 days. While reporting services provide the averages, identifying the high and low prices for the study period is still necessary.

Scalping

Scalping is liquidity provision by non-traditional market makers, whereby traders attempt to earn (or make) the bid-ask spread. This procedure allows for profit as long as price moves are smaller than the spread, and it normally involves establishing and liquidating a position quickly, usually within minutes or less.

A market maker is essentially a specialized scalper. The volume a market maker trades is many times that of the average individual scalper, and market makers use more sophisticated trading systems and technology. However, registered market makers are bound by exchange rules stipulating their minimum quote obligations. For instance, NASDAQ requires each market maker to post at least one bid and one ask at some price level, so as to maintain a two-sided market for each stock represented.

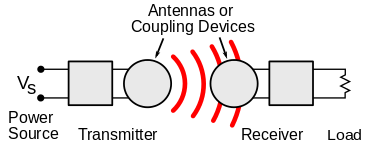

Transaction cost reduction

Most strategies referred to as algorithmic trading (as well as algorithmic liquidity-seeking) fall into the cost-reduction category. The basic idea is to break down a large order into small orders and place them in the market over time. The choice of algorithm depends on various factors, the most important being the volatility and liquidity of the stock. For example, for a highly liquid stock, matching a certain percentage of the overall volume traded in the stock (called volume inline algorithms) is usually a good strategy, but for a highly illiquid stock, algorithms try to match every order that has a favorable price (called liquidity-seeking algorithms).
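A minimal sketch of volume-inline (percentage-of-volume) sizing, assuming the algorithm observes the volume traded by other participants in each interval and wants its own fills to be a fixed fraction p of total volume. Since q = p·(other + q), each child order is q = p·other/(1 − p). The helper `pov_schedule` is hypothetical:

```python
def pov_schedule(interval_volumes: list[int], participation: float,
                 parent_qty: int) -> tuple[list[int], int]:
    """Child-order sizes for a percentage-of-volume algorithm.

    interval_volumes: volume traded by other participants in each interval.
    Returns (child order sizes, quantity still unfilled).
    """
    if not 0 < participation < 1:
        raise ValueError("participation must be between 0 and 1")
    remaining = parent_qty
    children = []
    for other_volume in interval_volumes:
        # Our own fills count toward total volume, hence the 1 / (1 - p) factor.
        qty = min(int(participation * other_volume / (1 - participation)), remaining)
        children.append(qty)
        remaining -= qty
        if remaining == 0:
            break
    return children, remaining


print(pov_schedule([10_000, 20_000, 5_000], participation=0.10, parent_qty=3_000))
```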

The success of these strategies is usually measured by comparing the average price at which the entire order was executed with the average price achieved through a benchmark execution for the same duration. Usually, the volume-weighted average price is used as the benchmark. At times, the execution price is also compared with the price of the instrument at the time of placing the order.
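A minimal sketch of that comparison, expressed in basis points with the assumed convention that a positive number is a cost (paying more than the benchmark when buying, or receiving less when selling). The fills, market VWAP, and arrival price are made-up inputs:

```python
def average_fill_price(fills: list[tuple[float, int]]) -> float:
    """Quantity-weighted average price of (price, quantity) fills."""
    total_qty = sum(q for _, q in fills)
    return sum(p * q for p, q in fills) / total_qty


def slippage_bps(avg_price: float, benchmark: float, side: str) -> float:
    """Execution cost versus a benchmark, in basis points (positive = worse)."""
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    sign = 1.0 if side == "buy" else -1.0
    return sign * (avg_price - benchmark) / benchmark * 10_000


fills = [(10.02, 500), (10.05, 500)]
avg = average_fill_price(fills)
print(round(slippage_bps(avg, 10.02, "buy"), 2))  # vs. a hypothetical market VWAP
print(round(slippage_bps(avg, 10.00, "buy"), 2))  # vs. a hypothetical arrival price
```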

A special class of these algorithms attempts to detect algorithmic or iceberg orders on the other side (e.g., if you are trying to buy, the algorithm will try to detect orders on the sell side). These algorithms are called sniffing algorithms. A typical example is “Stealth.”
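The following is not how any named product works; it is a hypothetical heuristic, assuming a feed of (price, displayed size, traded size) observations for one side of the book. A level whose displayed size is repeatedly consumed in full, and whose cumulative traded volume exceeds anything ever displayed there, is flagged as a possible iceberg:

```python
from collections import defaultdict


def suspected_icebergs(events: list[tuple[float, int, int]], min_refills: int = 3) -> list[float]:
    """Flag price levels that look like hidden (iceberg) orders.

    Each event is (price, displayed_size, traded_size) for one observation of a
    level. Repeatedly trading the full displayed size at the same price implies
    the displayed quantity kept being replenished.
    """
    stats = defaultdict(lambda: {"refills": 0, "traded": 0, "max_shown": 0})
    for price, shown, traded in events:
        level = stats[price]
        level["max_shown"] = max(level["max_shown"], shown)
        level["traded"] += traded
        if shown > 0 and traded >= shown:
            level["refills"] += 1  # the whole displayed size was consumed
    return sorted(
        price for price, level in stats.items()
        if level["refills"] >= min_refills and level["traded"] > level["max_shown"]
    )


events = [(10.00, 100, 100)] * 4 + [(10.01, 200, 50)]
print(suspected_icebergs(events))
```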

Some examples of algorithms are TWAP, VWAP, Implementation Shortfall, POV, Display size, Liquidity seeker, and Stealth. Modern algorithms are often optimally constructed via either static or dynamic programming.[47][48][49]
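The cited works are not reproduced here. As one well-known example of a static optimal schedule, the Almgren–Chriss model gives holdings x(t) = X·sinh(κ(T − t))/sinh(κT), where κ grows with risk aversion and volatility relative to temporary market impact. The sketch below, with a hypothetical `almgren_chriss_trades` helper, takes κ as a given input rather than deriving it from impact parameters:

```python
import math


def almgren_chriss_trades(total_shares: float, n_periods: int, kappa: float,
                          horizon: float = 1.0) -> list[float]:
    """Per-period trade sizes along the Almgren-Chriss optimal holdings curve.

    kappa > 0 front-loads the selling; as kappa -> 0 the schedule tends to
    equal-sized (TWAP-like) trades, which is returned directly for kappa <= 0.
    """
    if kappa <= 0:
        return [total_shares / n_periods] * n_periods
    times = [horizon * j / n_periods for j in range(n_periods + 1)]
    holdings = [total_shares * math.sinh(kappa * (horizon - t)) / math.sinh(kappa * horizon)
                for t in times]
    # Shares traded in each period = drop in holdings over that period.
    return [holdings[j] - holdings[j + 1] for j in range(n_periods)]


print([round(q) for q in almgren_chriss_trades(10_000, 5, kappa=3.0)])
```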

Strategies that only pertain to dark pools

Recently, HFT, which comprises a broad set of buy-side as well as market-making sell-side traders, has become more prominent and controversial.[50] These algorithms or techniques are commonly given names such as “Stealth” (developed by Deutsche Bank), “Iceberg”, “Dagger”, “Guerrilla”, “Sniper”, “BASOR” (developed by Quod Financial) and “Sniffer”.[51] Dark pools are alternative trading systems that are private in nature (and thus do not interact with public order flow) and instead seek to provide undisplayed liquidity to large blocks of securities.[52] In dark pools, trading takes place anonymously, with most orders hidden or “iceberged.”[53] Gamers or “sharks” sniff out large orders by “pinging” the market with small buy and sell orders. When several small orders are filled, the sharks may have discovered the presence of a large iceberged order.

“Now it’s an arms race,” said Andrew Lo, director of the Massachusetts Institute of Technology’s Laboratory for Financial Engineering. “Everyone is building more sophisticated algorithms, and the more competition exists, the smaller the profits.”[54]

Market timing

Strategies designed to generate alpha are considered market timing strategies. These strategies are designed using a methodology that includes backtesting, forward testing, and live testing. Market timing algorithms typically use technical indicators such as moving averages but can also include pattern recognition logic implemented using finite-state machines.
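As an illustration only, a pattern-recognition rule can be written as a small finite-state machine. The hypothetical pattern below enters after `n_up` consecutive higher closes and exits on the first lower close:

```python
from enum import Enum, auto


class State(Enum):
    FLAT = auto()
    LONG = auto()


def run_fsm(closes: list[float], n_up: int = 3) -> list[tuple[str, float]]:
    """Return (action, price) pairs produced by a two-state entry/exit machine."""
    state = State.FLAT
    up_run = 0
    actions = []
    for prev, curr in zip(closes, closes[1:]):
        up_run = up_run + 1 if curr > prev else 0
        if state is State.FLAT and up_run >= n_up:
            state = State.LONG  # pattern complete: enter
            actions.append(("buy", curr))
        elif state is State.LONG and curr < prev:
            state = State.FLAT  # first lower close: exit
            actions.append(("sell", curr))
    return actions


print(run_fsm([10, 11, 12, 13, 14, 13, 12]))  # [('buy', 13), ('sell', 13)]
```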

Backtesting the algorithm is typically the first stage and involves simulating hypothetical trades over an in-sample data period. Optimization is performed to determine the best-performing inputs. Steps taken to reduce the chance of over-optimization can include modifying the inputs by ±10%, schmooing the inputs in large steps, running Monte Carlo simulations, and ensuring that slippage and commission are accounted for.[55]
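A minimal sketch of a cost-aware backtest and the ±10% input check, assuming a hypothetical long/flat moving-average crossover on a single instrument and a fixed per-trade cost standing in for commission plus slippage (Monte Carlo simulation is not shown):

```python
def sma(values: list[float], n: int, i: int) -> float:
    """Simple moving average of the n values ending at index i (inclusive)."""
    return sum(values[i - n + 1:i + 1]) / n


def backtest_ma(prices: list[float], fast: int, slow: int, cost_per_trade: float) -> float:
    """Total P&L of a one-unit long/flat moving-average crossover.

    The position chosen at the close of bar i earns the move from i to i + 1,
    so the signal never uses future prices. cost_per_trade is charged on every
    position change; an open position is marked to the last price.
    """
    pnl, position = 0.0, 0
    for i in range(slow - 1, len(prices) - 1):
        target = 1 if sma(prices, fast, i) > sma(prices, slow, i) else 0
        if target != position:
            pnl -= cost_per_trade
            position = target
        pnl += position * (prices[i + 1] - prices[i])
    return pnl


def sensitivity(prices: list[float], fast: int, slow: int,
                cost_per_trade: float, pct: float = 0.10) -> dict[tuple[int, int], float]:
    """P&L at the chosen inputs and with each input shifted by about +/- pct."""
    results = {}
    for f_mult in (1 - pct, 1.0, 1 + pct):
        for s_mult in (1 - pct, 1.0, 1 + pct):
            f = max(1, round(fast * f_mult))
            s = max(f + 1, round(slow * s_mult))
            results[(f, s)] = backtest_ma(prices, f, s, cost_per_trade)
    return results


prices = [float(p) for p in range(1, 51)]
print(sensitivity(prices, fast=3, slow=10, cost_per_trade=0.5))
```

If P&L collapses when an input moves by 10%, the chosen values are likely over-fitted to the in-sample data.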

Forward testing the algorithm is the next stage and involves running the algorithm on an out-of-sample data set to ensure that it performs within backtested expectations.

Live testing is the final stage of development and requires the developer to compare actual live trades with both the backtested and forward tested models. Metrics compared include percent profitable, profit factor, maximum drawdown and average gain per trade.
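The four metrics can be computed from a list of per-trade profits and losses. A minimal sketch, assuming drawdown is measured on closed-trade equity starting from zero; `trade_metrics` is a hypothetical name:

```python
def trade_metrics(trade_pnls: list[float]) -> dict[str, float]:
    """Percent profitable, profit factor, maximum drawdown, and average gain per trade."""
    wins = [p for p in trade_pnls if p > 0]
    losses = [p for p in trade_pnls if p < 0]
    gross_profit, gross_loss = sum(wins), -sum(losses)
    equity, peak, max_dd = 0.0, 0.0, 0.0
    for p in trade_pnls:
        equity += p
        peak = max(peak, equity)
        max_dd = max(max_dd, peak - equity)  # largest fall from a running peak
    return {
        "percent_profitable": 100.0 * len(wins) / len(trade_pnls),
        # Infinite when there are no losing trades.
        "profit_factor": gross_profit / gross_loss if gross_loss else float("inf"),
        "max_drawdown": max_dd,
        "avg_gain_per_trade": sum(trade_pnls) / len(trade_pnls),
    }


print(trade_metrics([100, -50, 200, -150, -100, 50]))
```

Running the same function on backtested, forward-tested, and live trade lists gives directly comparable numbers.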

High-frequency trading

As noted above, high-frequency trading (HFT) is a form of algorithmic trading characterized by high turnover and high order-to-trade ratios. Although there is no single definition of HFT, its key attributes include highly sophisticated algorithms, specialized order types, co-location, very short-term investment horizons, and high cancellation rates for orders.[6] In the U.S., HFT firms represent 2% of the approximately 20,000 firms operating today but account for 73% of all equity trading volume.[citation needed] As of the first quarter of 2009, total assets under management for hedge funds with HFT strategies were US$141 billion, down about 21% from their high.[56] The HFT strategy was first made successful by Renaissance Technologies.[57] High-frequency funds started to become especially popular in 2007 and 2008.[57] Many HFT firms are market makers and provide liquidity to the market, which has lowered volatility and helped narrow bid–offer spreads, making trading and investing cheaper for other market participants.[56][58][59] HFT has been a subject of intense public focus since the U.S. Securities and Exchange Commission and the Commodity Futures Trading Commission stated that both algorithmic trading and HFT contributed to volatility in the 2010 Flash Crash. Among the major U.S. high-frequency trading firms are Chicago Trading, Virtu Financial, Timber Hill, ATD, GETCO, and Citadel LLC.[60]

There are four key categories of HFT strategies: market making based on order flow, market making based on tick data information, event arbitrage, and statistical arbitrage. All portfolio-allocation decisions are made by computerized quantitative models. The success of computerized strategies is largely driven by their ability to process large volumes of information simultaneously, something ordinary human traders cannot do.

Market making

Market making involves placing a limit order to sell (or offer) above the current market price or a buy limit order (or bid) below the current price on a regular and continuous basis to capture the bid-ask spread. Automated Trading Desk, which was bought by Citigroup in July 2007, has been an active market maker, accounting for about 6% of total volume on both NASDAQ and the New York Stock Exchange.[61]
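A minimal sketch of quoting around the midpoint, using Python’s `decimal` module to avoid floating-point tick errors and assuming a tick size that is a power of ten; the half-spread and the helper names are hypothetical, and real market makers also adjust quotes for inventory and risk:

```python
from decimal import Decimal, ROUND_CEILING, ROUND_FLOOR


def make_quotes(mid: Decimal, half_spread: Decimal,
                tick: Decimal = Decimal("0.01")) -> tuple[Decimal, Decimal]:
    """Bid below and offer above the mid, rounded outward to the tick grid."""
    bid = (mid - half_spread).quantize(tick, rounding=ROUND_FLOOR)
    ask = (mid + half_spread).quantize(tick, rounding=ROUND_CEILING)
    return bid, ask


def round_trip_pnl(bid: Decimal, ask: Decimal, qty: int) -> Decimal:
    """Spread captured if both the bid and the offer are filled for qty shares."""
    return (ask - bid) * qty


bid, ask = make_quotes(Decimal("20.105"), Decimal("0.05"))
print(bid, ask, round_trip_pnl(bid, ask, 100))
```

The spread is earned only if both sides fill before the price moves; otherwise the market maker is left holding inventory.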

Statistical arbitrage

Another set of HFT strategies builds on classical arbitrage involving several securities, such as covered interest rate parity in the foreign exchange market, which relates the prices of a domestic bond, a bond denominated in a foreign currency, the spot price of the currency, and the price of a forward contract on the currency. If the market prices differ from those implied by the model by enough to cover transaction costs, then four transactions can be made to guarantee a risk-free profit. HFT allows similar arbitrages using models of greater complexity involving many more than four securities. The TABB Group estimated that annual aggregate profits of low-latency arbitrage strategies exceeded US$21 billion.[16]
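A minimal sketch of the covered-interest-parity check, assuming simple interest over the horizon, an exchange rate quoted as domestic currency per unit of foreign currency, and a hypothetical cost threshold in the same units as the profit (per unit of domestic currency at maturity). The four legs are: borrow, convert at spot, deposit, and lock in the reverse conversion with a forward:

```python
def cip_forward(spot: float, r_dom: float, r_for: float, t_years: float) -> float:
    """Forward rate implied by covered interest parity (simple interest)."""
    return spot * (1 + r_dom * t_years) / (1 + r_for * t_years)


def cip_arbitrage(spot: float, forward_mkt: float, r_dom: float, r_for: float,
                  t_years: float, cost: float) -> tuple[str, float]:
    """Direction and net profit per unit of domestic currency, or ("no trade", 0.0)."""
    # Value at maturity of routing 1 domestic unit through the foreign deposit
    # (spot -> invest abroad -> sell forward) minus the domestic deposit.
    gap = (forward_mkt / spot) * (1 + r_for * t_years) - (1 + r_dom * t_years)
    if gap > cost:
        return "borrow domestic, buy foreign spot, invest abroad, sell foreign forward", gap - cost
    if -gap > cost:
        return "borrow foreign, sell it spot, invest at home, buy foreign forward", -gap - cost
    return "no trade", 0.0


print(round(cip_forward(1.10, 0.05, 0.03, 1.0), 5))
print(cip_arbitrage(1.10, 1.13, 0.05, 0.03, 1.0, cost=0.001))
```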

A wide range of statistical arbitrage strategies have been developed whereby trading decisions are made on the basis of deviations from statistically significant relationships. Like market-making strategies, statistical arbitrage can be applied in all asset classes.

Event arbitrage

Event arbitrage is a subset of risk, merger, convertible, or distressed securities arbitrage that counts on a specific event, such as a contract signing, regulatory approval, judicial decision, etc., to change the price or rate relationship of two or more financial instruments and permit the arbitrageur to earn a profit.[62]

Merger arbitrage, also called risk arbitrage, is an example of this. Merger arbitrage generally consists of buying the stock of a company that is the target of a takeover while shorting the stock of the acquiring company. Usually the market price of the target company is less than the price offered by the acquiring company. The spread between these two prices depends mainly on the probability and the timing of the takeover being completed, as well as the prevailing level of interest rates. The bet in a merger arbitrage is that such a spread will eventually be zero, if and when the takeover is completed. The risk is that the deal “breaks” and the spread massively widens.
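A common back-of-the-envelope reading of the spread treats the target price as a probability-weighted mix of the offer value and a “fallback” price if the deal breaks: price = p·offer + (1 − p)·fallback, so p = (price − fallback)/(offer − fallback). A minimal sketch, ignoring dividends, financing costs, and discounting; the fallback price is an analyst’s estimate and `merger_arb_stats` is hypothetical:

```python
def merger_arb_stats(target_price: float, offer_value: float,
                     fallback_price: float, years_to_close: float) -> dict[str, float]:
    """Spread, market-implied completion probability, and annualized return if the deal closes.

    offer_value: cash per share, or exchange ratio x acquirer price for a stock
    deal (the acquirer short hedges that component).
    fallback_price: assumed target price if the deal breaks.
    """
    spread = offer_value - target_price
    implied_prob = (target_price - fallback_price) / (offer_value - fallback_price)
    annualized_if_closed = (offer_value / target_price) ** (1 / years_to_close) - 1
    return {"spread": spread, "implied_prob": implied_prob,
            "annualized_if_closed": annualized_if_closed}


print(merger_arb_stats(target_price=47.0, offer_value=50.0, fallback_price=35.0, years_to_close=0.5))
```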

Spoofing

One strategy that some traders have employed, which has been proscribed yet likely continues, is called spoofing. It is the act of placing orders to give the impression of wanting to buy or sell shares, without ever intending to let the orders execute, in order to temporarily manipulate the market and buy or sell shares at a more favorable price. This is done by entering limit orders that change the best bid or offer reported to other market participants. The trader then places trades based on the artificial change in price and cancels the limit orders before they are executed.

Suppose a trader wants to sell shares of a company with a current bid of $20.00 and a current ask of $20.20. The trader places a buy order at $20.10, still some distance from the ask so it will not be executed, and the $20.10 bid is reported as the best bid in the National Best Bid and Offer. The trader then submits a market order to sell the shares they wished to sell. Because the best bid is the trader’s artificial bid, a market maker fills the sale order at $20.10, a $0.10 higher sale price per share. The trader subsequently cancels the buy order they never intended to complete.

Quote stuffing

Quote stuffing is a tactic employed by malicious traders that involves quickly entering and withdrawing large quantities of orders in an attempt to flood the market, thereby gaining an advantage over slower market participants.[63] The rapidly placed and canceled orders cause the market data feeds that ordinary investors rely on to delay price quotes while the stuffing is occurring. HFT firms benefit from proprietary, higher-capacity feeds and the most capable, lowest-latency infrastructure. Researchers showed that high-frequency traders are able to profit from the artificially induced latencies and arbitrage opportunities that result from quote stuffing.[64]

Low latency trading systems

Network-induced latency (in this context, a synonym for delay), measured as one-way delay or round-trip time, is normally defined as the time it takes for a data packet to travel from one point to another.[65] Low-latency trading refers to the algorithmic trading systems and network routes used by financial institutions connecting to stock exchanges and electronic communication networks (ECNs) to rapidly execute financial transactions.[66] Most HFT firms depend on low-latency execution of their trading strategies. Joel Hasbrouck and Gideon Saar (2013) measure latency based on three components: the time it takes for 1) information to reach the trader, 2) the trader’s algorithms to analyze the information, and 3) the generated action to reach the exchange and get implemented.[67] In a contemporary electronic market (circa 2009), low-latency trade processing time was defined as under 10 milliseconds, and ultra-low latency as under 1 millisecond.[68]

Low-latency traders depend on ultra-low-latency networks. They profit by providing information, such as competing bids and offers, to their algorithms microseconds faster than their competitors.[16] The revolutionary advance in speed has created a need for firms to have a real-time, colocated trading platform in order to benefit from high-frequency strategies.[16] Strategies are constantly altered to reflect subtle changes in the market and to combat the threat of being reverse engineered by competitors. This reflects the evolutionary nature of algorithmic trading strategies: they must be able to adapt and trade intelligently regardless of market conditions, which requires the flexibility to withstand a vast array of market scenarios. As a result, firms spend a significant proportion of their net revenue on R&D for these autonomous trading systems.[16]

Strategy implementation

Most algorithmic strategies are implemented using modern programming languages, although some still implement strategies designed in spreadsheets. Increasingly, the algorithms used by large brokerages and asset managers are written to the FIX Protocol’s Algorithmic Trading Definition Language (FIXatdl), which allows firms receiving orders to specify exactly how their electronic orders should be expressed. Orders built using FIXatdl can then be transmitted from traders’ systems via the FIX Protocol.[69] Basic models can rely on as little as a linear regression, while more complex game-theoretic, pattern recognition,[70] or predictive models can also be used to initiate trading. More complex methods such as Markov chain Monte Carlo have been used to create these models.[citation needed]
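FIXatdl itself is an XML format describing an algorithm’s parameters; what eventually travels over the wire is an ordinary FIX tag=value message. A minimal sketch of building one, assuming FIX 4.2 and a hypothetical `fix_message` helper, shows how BodyLength (tag 9) and CheckSum (tag 10) are computed. The example order deliberately omits fields a real NewOrderSingle requires, such as SendingTime (52) and TransactTime (60), as well as any broker-specific algorithm parameter tags:

```python
SOH = "\x01"  # FIX field delimiter


def fix_message(fields: list[tuple[int, str]], begin_string: str = "FIX.4.2") -> str:
    """Assemble a tag=value FIX message with BodyLength (9) and CheckSum (10).

    BodyLength counts the bytes after the 9= field up to and including the
    delimiter that precedes 10=; CheckSum is the byte sum of everything before
    10=, modulo 256, written as three digits.
    """
    body = "".join(f"{tag}={value}{SOH}" for tag, value in fields)
    head = f"8={begin_string}{SOH}9={len(body.encode('ascii'))}{SOH}"
    checksum = sum((head + body).encode("ascii")) % 256
    return f"{head}{body}10={checksum:03d}{SOH}"


# Hypothetical NewOrderSingle (35=D): buy (54=1) 100 IBM at a 150.25 limit (40=2).
order = fix_message([
    (35, "D"), (49, "BUYSIDE"), (56, "BROKER"), (34, "1"),
    (11, "ORD-0001"), (21, "1"), (55, "IBM"), (54, "1"),
    (38, "100"), (40, "2"), (44, "150.25"),
])
print(order.replace(SOH, "|"))
```

In practice a FIX engine fills in session fields such as sequence numbers and timestamps, so application code rarely assembles raw messages by hand.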

Issues and developments

Algorithmic trading has been shown to substantially improve market liquidity[71] among other benefits. However, improvements in productivity brought by algorithmic trading have been opposed by human brokers and traders facing stiff competition from computers.

Cyborg finance

Technological advances in finance, particularly those relating to algorithmic trading, have increased financial speed, connectivity, reach, and complexity while simultaneously reducing its humanity. Computers running software based on complex algorithms have replaced humans in many functions in the financial industry. Finance is essentially becoming an industry where machines and humans share the dominant roles, transforming modern finance into what one scholar has called “cyborg finance.”[72]

Concerns

While many experts laud the benefits of innovation in computerized algorithmic trading, other analysts have expressed concern with specific aspects of computerized trading.

“The downside with these systems is their black box-ness,” Mr. Williams said. “Traders have intuitive senses of how the world works. But with these systems you pour in a bunch of numbers, and something comes out the other end, and it’s not always intuitive or clear why the black box latched onto certain data or relationships.” [54]

“The Financial Services Authority has been keeping a watchful eye on the development of black box trading. In its annual report the regulator remarked on the great benefits of efficiency that new technology is bringing to the market. But it also pointed out that ‘greater reliance on sophisticated technology and modelling brings with it a greater risk that systems failure can result in business interruption’.” [73]

UK Treasury minister Lord Myners has warned that companies could become the “playthings” of speculators because of automatic high-frequency trading. Lord Myners said the process risked destroying the relationship between an investor and a company.[74]

Other issues include the technical problem of latency (the delay in getting quotes to traders),[75] security, and the possibility of a complete system breakdown leading to a market crash.[76]

“Goldman spends tens of millions of dollars on this stuff. They have more people working in their technology area than people on the trading desk…The nature of the markets has changed dramatically.” [77]

On August 1, 2012, Knight Capital Group experienced a technology issue in its automated trading system,[78] causing a loss of $440 million.

“This issue was related to Knight’s installation of trading software and resulted in Knight sending numerous erroneous orders in NYSE-listed securities into the market. This software has been removed from the company’s systems. [...] Clients were not negatively affected by the erroneous orders, and the software issue was limited to the routing of certain listed stocks to NYSE. Knight has traded out of its entire erroneous trade position, which has resulted in a realized pre-tax loss of approximately $440 million.”

Algorithmic and high-frequency trading were shown to have contributed to volatility during the May 6, 2010, Flash Crash,[22][24] when the Dow Jones Industrial Average plunged about 600 points, only to recover those losses within minutes. At the time, it was the second-largest point swing (1,010.14 points) and the biggest one-day point decline (998.5 points) on an intraday basis in Dow Jones Industrial Average history.[79]

Recent developments

Financial market news is now being formatted by firms such as Need To Know News, Thomson Reuters, Dow Jones, and Bloomberg to be read and traded on via algorithms.

“Computers are now being used to generate news stories about company earnings results or economic statistics as they are released. And this almost instantaneous information forms a direct feed into other computers which trade on the news.”[80]

The algorithms do not trade only on simple news stories; they also interpret news that is more difficult to understand. Some firms are also attempting to automatically assign sentiment (deciding whether the news is good or bad) to news stories so that automated trading can work directly on the news story.[81]
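
The following is a minimal sketch of automatic sentiment assignment. It assumes a tiny hand-made word list: each headline scores the count of positive words minus the count of negative words, and the sign of that score maps to a trading action. The word lists and the functions `headline_sentiment` and `news_signal` are hypothetical. Commercial news-analytics systems are far more sophisticated.

```python
import re

# Hypothetical word lists, for illustration only.
POSITIVE = {"beat", "beats", "growth", "upgrade", "record", "raises"}
NEGATIVE = {"miss", "misses", "loss", "downgrade", "recall", "cuts"}


def headline_sentiment(headline: str) -> int:
    """Score = number of positive words minus number of negative words."""
    words = re.findall(r"[a-z]+", headline.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def news_signal(headline: str) -> str:
    """Map the sign of the sentiment score to a (hypothetical) action."""
    score = headline_sentiment(headline)
    if score > 0:
        return "buy"
    if score < 0:
        return "sell"
    return "hold"
```

With these lists, `"Acme beats estimates, raises guidance"` scores 2 and maps to `"buy"`. A neutral headline scores 0 and maps to `"hold"`.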

“Increasingly, people are looking at all forms of news and building their own indicators around it in a semi-structured way” as they constantly seek out new trading advantages, said Rob Passarella, global director of strategy at Dow Jones Enterprise Media Group. His firm provides both a low-latency news feed and news analytics for traders. Passarella also pointed to new academic research on the degree to which frequent Google searches for various stocks can serve as trading indicators, on the potential impact of various phrases and words that may appear in Securities and Exchange Commission statements, and on the latest wave of online communities devoted to stock trading topics.[81]

“Markets are by their very nature conversations, having grown out of coffee houses and taverns,” he said. The way conversations get created in a digital society will therefore also be used to convert news into trades, Passarella said.[81]

“There is a real interest in moving the process of interpreting news from the humans to the machines,” says Kirsti Suutari, global business manager of algorithmic trading at Reuters. “More of our customers are finding ways to use news content to make money.”[80]

An example of the importance of news reporting speed to algorithmic traders was an advertising campaign by Dow Jones (appearances included page W15 of The Wall Street Journal on March 1, 2008) claiming that its service had beaten other news services by two seconds in reporting an interest rate cut by the Bank of England.

In July 2007, Citigroup, which had already developed its own trading algorithms, paid $680 million for Automated Trading Desk, a 19-year-old firm that traded about 200 million shares a day.[82] Citigroup had previously bought Lava Trading and OnTrade Inc.

In late 2010, the UK Government Office for Science initiated a Foresight project investigating the future of computer trading in the financial markets,[83] led by Dame Clara Furse, former CEO of the London Stock Exchange. In September 2011 the project published its initial findings in the form of a three-chapter working paper available in three languages, along with 16 additional papers that provide supporting evidence.[84] All of these papers were authored or co-authored by leading academics and practitioners and were subjected to anonymous peer review. The Foresight study, released in 2012, acknowledged issues related to periodic illiquidity, new forms of manipulation and potential threats to market stability due to errant algorithms or excessive message traffic. However, the report was also criticized for adopting “standard pro-HFT arguments” and for having advisory panel members linked to the HFT industry.[85]

System architecture

A traditional trading system consists primarily of two blocks: one that receives the market data and another that sends order requests to the exchange. An algorithmic trading system, however, can be broken down into three parts:[86]

- Exchange

- The server

- Application

Figure: Traditional architecture of algorithmic trading systems

The exchange(s) provide data to the system, typically consisting of the latest order book, traded volumes, and the last traded price (LTP) of a scrip. The server receives this data while simultaneously acting as a store for the historical database. The data is analyzed on the application side, where the user feeds in trading strategies and can view them on the GUI. Once an order is generated, it is sent to the order management system (OMS), which in turn transmits it to the exchange.

The old-school, high-latency architecture of algorithmic systems is gradually being replaced by newer, state-of-the-art infrastructure and low-latency networks. The complex event processing (CEP) engine, which is the heart of decision making in algorithmic trading systems, is used for order routing and risk management.
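
A CEP engine evaluates rules over patterns of events within time windows. The toy rule below shows the idea in a risk-management setting: it fires when the price drops by more than a given fraction from its peak within the last few seconds. The class `PriceDropRule`, its thresholds, and the assumption that events arrive in non-decreasing timestamp order are all hypothetical. Production CEP engines evaluate many such rules concurrently over high-rate streams.

```python
from collections import deque


class PriceDropRule:
    """Toy complex-event-processing rule (hypothetical thresholds).

    Fires when the price is more than max_drop (a fraction) below the
    highest price seen in the last window_s seconds. Assumes events
    arrive in non-decreasing timestamp order.
    """

    def __init__(self, window_s: float, max_drop: float) -> None:
        self.window_s = window_s
        self.max_drop = max_drop
        self.events: deque[tuple[float, float]] = deque()  # (timestamp, price)

    def on_event(self, timestamp: float, price: float) -> bool:
        self.events.append((timestamp, price))
        # Evict events that have fallen out of the sliding time window.
        while self.events and self.events[0][0] < timestamp - self.window_s:
            self.events.popleft()
        peak = max(p for _, p in self.events)
        return (peak - price) / peak > self.max_drop
```

With `window_s=5.0` and `max_drop=0.02`, a move from 100.0 to 97.5 within two seconds triggers the rule. A price of 97.0 arriving ten seconds later does not, because the earlier peak has left the window.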

With the emergence of the FIX (Financial Information Exchange) protocol, connecting to different destinations has become easier, and the time to market for connecting to a new destination has been reduced. With a standard protocol in place, integrating third-party vendors’ data feeds is no longer cumbersome.
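
The following minimal sketch of FIX tag=value encoding shows what a standard wire format looks like in practice:

- Fields are `tag=value` pairs separated by the SOH character (ASCII 0x01).
- Tag `9` (BodyLength) counts the bytes after its own field, up to the start of the checksum field.
- Tag `10` (CheckSum) is the byte sum of everything before it, modulo 256, written as three digits.

The function `encode_fix` is hypothetical. It deliberately omits required session-header fields such as SenderCompID (49), TargetCompID (56), MsgSeqNum (34) and SendingTime (52). A real deployment would use a FIX engine rather than hand-built strings.

```python
SOH = "\x01"  # FIX field delimiter


def encode_fix(msg_type: str, fields: list[tuple[int, str]],
               begin_string: str = "FIX.4.2") -> str:
    """Build a simplified FIX tag=value message with BodyLength and CheckSum.

    Session-header fields are intentionally left out of this sketch.
    """
    body = f"35={msg_type}{SOH}" + "".join(f"{tag}={value}{SOH}" for tag, value in fields)
    # BodyLength (9) counts everything after its own delimiter up to tag 10.
    head = f"8={begin_string}{SOH}9={len(body.encode('ascii'))}{SOH}"
    # CheckSum (10): sum of all preceding bytes modulo 256, three digits.
    checksum = sum((head + body).encode("ascii")) % 256
    return f"{head}{body}10={checksum:03d}{SOH}"
```

For example, `encode_fix("D", [(55, "ABC"), (54, "1"), (38, "100")])` builds a New Order Single (MsgType `D`) to buy (Side `1`) 100 units (OrderQty, tag 38) of the placeholder symbol ABC (Symbol, tag 55), with a BodyLength of 24.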

Figure: Emergence of protocols in algorithmic trading

Effects

Though its development may have been prompted by decreasing trade sizes caused by decimalization, algorithmic trading has reduced trade sizes further. Jobs once done by human traders are being shifted to computers. The speed of computer connections, measured in milliseconds and even microseconds, has become very important.[87][88]

More fully automated markets such as NASDAQ, Direct Edge and BATS (formerly an acronym for Better Alternative Trading System) in the US have gained market share from less automated markets such as the NYSE. Economies of scale in electronic trading have contributed to lower commissions and trade processing fees, and to international mergers and consolidation of financial exchanges.

Competition is developing among exchanges for the fastest processing times for completing trades. For example, in June 2007, the London Stock Exchange launched a new system called TradElect that promised an average 10-millisecond turnaround time from placing an order to final confirmation and could process 3,000 orders per second.[89] Since then, competing exchanges have continued to reduce latency, with turnaround times of 3 milliseconds available. This is of great importance to high-frequency traders, because they have to attempt to pinpoint the consistent and probable performance ranges of given financial instruments. These professionals often deal in stock index futures such as the E-mini S&P 500, because they seek consistency and risk mitigation along with top performance. They must filter market data into their software so that they have the lowest latency and highest liquidity at the moment of placing stop-losses and/or taking profits. With high volatility in these markets, this becomes a complex and potentially nerve-wracking endeavor, where a small mistake can lead to a large loss. Absolute frequency data play into the development of the trader’s pre-programmed instructions.[90]

In the U.S., spending on computers and software in the financial industry increased to $26.4 billion in 2005.[2][91]

Algorithmic trading has caused a shift in the types of employees working in the financial industry. For example, many physicists have entered the financial industry as quantitative analysts. Some physicists have even begun to do research in economics as part of their doctoral work. This interdisciplinary movement is sometimes called econophysics.[92] Some researchers also cite a “cultural divide” between employees of firms primarily engaged in algorithmic trading and traditional investment managers. Algorithmic trading has encouraged an increased focus on data and has decreased the emphasis on sell-side research.[93]

Communication standards

Algorithmic trades require communicating considerably more parameters than traditional market and limit orders. A trader on one end (the “buy side”) must enable their trading system (often called an “order management system” or “execution management system”) to understand a constantly proliferating flow of new algorithmic order types. The R&D and other costs of constructing complex new algorithmic order types, along with the execution infrastructure and the marketing costs of distributing them, are substantial. What was needed was a way for marketers (the “sell side”) to express algo orders electronically, so that buy-side traders could simply drop the new order types into their systems and be ready to trade them without coding custom order entry screens each time.

FIX Protocol is a trade association that publishes free, open standards in the securities trading area. The FIX language was originally created by Fidelity Investments. The association’s members include virtually all large broker-dealers and many midsized and smaller ones, as well as money center banks, institutional investors, mutual funds, and others. The association dominates standard setting in the pretrade and trade areas of securities transactions. In 2006–2007 several members got together and published a draft XML standard for expressing algorithmic order types. The standard is called FIX Algorithmic Trading Definition Language (FIXatdl).[94]
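
The core idea behind a standard such as FIXatdl is that an algorithmic order type is described as data rather than hard-coded. The buy-side system can then validate and transmit any new order type with the same generic code. The sketch below illustrates that idea with a plain Python dictionary. It is NOT the FIXatdl XML schema. The `VWAP_DEFINITION` structure, its parameter names, and the tag numbers 7001–7003 are all hypothetical, and so is the helper `build_algo_fields`.

```python
# Hypothetical, simplified order-type definition in the spirit of FIXatdl.
# Not the FIXatdl schema: names and tag numbers are illustrative only.
VWAP_DEFINITION = {
    "name": "VWAP",
    "parameters": [
        {"name": "StartTime", "tag": 7001, "type": str, "required": True},
        {"name": "EndTime", "tag": 7002, "type": str, "required": True},
        {"name": "MaxPctVolume", "tag": 7003, "type": int, "required": False,
         "min": 1, "max": 50},
    ],
}


def build_algo_fields(definition: dict, user_input: dict) -> list[tuple[int, str]]:
    """Validate user input against a definition and return (tag, value) pairs.

    The same generic code handles any order type described this way, so no
    custom order entry screen has to be coded for each new algorithm.
    """
    fields = []
    for param in definition["parameters"]:
        name = param["name"]
        if name not in user_input:
            if param["required"]:
                raise ValueError(f"{definition['name']}: missing {name}")
            continue
        value = user_input[name]
        if not isinstance(value, param["type"]):
            raise TypeError(f"{name} must be {param['type'].__name__}")
        if "min" in param and value < param["min"]:
            raise ValueError(f"{name} below minimum {param['min']}")
        if "max" in param and value > param["max"]:
            raise ValueError(f"{name} above maximum {param['max']}")
        fields.append((param["tag"], str(value)))
    return fields
```

A broker could publish a new definition, and the validation loop would accept it unchanged. That is the "drop in" property described above, here in a deliberately tiny form.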

Notes

- As an arbitrage consists of at least two trades, the metaphor is of putting on a pair of pants, one leg (trade) at a time. The risk that one trade (leg) fails to execute is thus ‘leg risk’.

References

- The New Investor, UCLA Law Review, available at: http://ssrn.com/abstract=2227498

- “Business and finance”. The Economist.

- “All Reports”.

- The New Financial Industry, Alabama Law Review, available at: http://ssrn.com/abstract=2417988

- Lemke and Lins, “Soft Dollars and Other Trading Activities,” § 2:30 (Thomson West, 2015–2016 ed.).

- Lemke and Lins, “Soft Dollars and Other Trading Activities,” § 2:31 (Thomson West, 2015–2016 ed.).

- Silla Brush (June 20, 2012). “CFTC Panel Urges Broad Definition of High-Frequency Trading”. Bloomberg.com.

- Futures Trading Commission Votes to Establish a New Subcommittee of the Technology Advisory Committee (TAC) to focus on High Frequency Trading, February 9, 2012, Commodity Futures Trading Commission

- Easley, D.; López de Prado, M.; O’Hara, M. (Winter 2011). “The Microstructure of the ‘Flash Crash’: Flow Toxicity, Liquidity Crashes and the Probability of Informed Trading”. The Journal of Portfolio Management. 37 (2): 118–128. SSRN 1695041.

- FT.com (3 April 2014). “Fierce competition forces ‘flash’ HFT firms into new markets”.

- Opalesque (4 August 2009). “Opalesque Exclusive: High-frequency trading under the microscope”.

- Virtu Financial Form S-1, available at https://www.sec.gov/Archives/edgar/data/1592386/000104746914002070/a2218589zs-1.htm

- Laughlin, G. Insights into High Frequency Trading from the Virtu Financial IPO WSJ.com Retrieved 22 May 2015.

- Morton Glantz, Robert Kissell. Multi-Asset Risk Modeling: Techniques for a Global Economy in an Electronic and Algorithmic Trading Era. Academic Press, Dec 3, 2013, p. 258.

- “Aite Group”.

- Rob Iati, The Real Story of Trading Software Espionage Archived July 7, 2011, at the Wayback Machine., AdvancedTrading.com, July 10, 2009

- Times Topics: High-Frequency Trading, The New York Times, December 20, 2012

- A London Hedge Fund That Opts for Engineers, Not M.B.A.’s by Heather Timmons, August 18, 2006

- “Business and finance”. The Economist.

- “Algorithmic trading, Ahead of the tape”, The Economist, 383 (June 23, 2007), p. 85, June 21, 2007

- “MTS to mull bond access”, The Wall Street Journal Europe, p. 21, April 18, 2007

- Lauricella, Tom (2 Oct 2010). “How a Trading Algorithm Went Awry”. The Wall Street Journal.

- Mehta, Nina (1 Oct 2010). “Automatic Futures Trade Drove May Stock Crash, Report Says”. Bloomberg L.P.

- Bowley, Graham (1 Oct 2010). “Lone $4.1 Billion Sale Led to ‘Flash Crash’ in May”. The New York Times.

- Spicer, Jonathan (1 Oct 2010). “Single U.S. trade helped spark May’s flash crash”. Reuters.

- Goldfarb, Zachary (1 Oct 2010). “Report examines May’s ‘flash crash,’ expresses concern over high-speed trading”. Washington Post.

- Popper, Nathaniel (1 Oct 2010). “$4.1-billion trade set off Wall Street ‘flash crash,’ report finds”. Los Angeles Times.

- Younglai, Rachelle (5 Oct 2010). “U.S. probes computer algorithms after ‘flash crash’”. Reuters.

- Spicer, Jonathan (15 Oct 2010). “Special report: Globally, the flash crash is no flash in the pan”. Reuters.

- TECHNICAL COMMITTEE OF THE INTERNATIONAL ORGANIZATION OF SECURITIES COMMISSIONS (July 2011), “Regulatory Issues Raised by the Impact of Technological Changes on Market Integrity and Efficiency” (PDF), IOSCO Technical Committee, retrieved July 12, 2011

- Huw Jones (July 7, 2011). “Ultra fast trading needs curbs -global regulators”. Reuters. Retrieved July 12, 2011.

- Kirilenko, Andrei; Kyle, Albert S.; Samadi, Mehrdad; Tuzun, Tugkan (May 5, 2014), The Flash Crash: The Impact of High Frequency Trading on an Electronic Market (PDF)

- Amery, Paul (November 11, 2010). “Know Your Enemy”. IndexUniverse.eu. Retrieved 26 March 2013.

- Petajisto, Antti (2011). “The index premium and its hidden cost for index funds” (PDF). Journal of Empirical Finance. 18 (2): 271–288. doi:10.1016/j.jempfin.2010.10.002. Retrieved 26 March 2013.

- Rekenthaler, John (February–March 2011). “The Weighting Game, and Other Puzzles of Indexing” (PDF). Morningstar Advisor. pp. 52–56 [56]. Archived from the original (PDF) on July 29, 2013. Retrieved 26 March 2013.

- Sornette (2003), Critical Market Crashes, archived from the original on May 3, 2010

- https://www.investopedia.com/ask/answers/why-nyse-switch-fractions-to-decimals/

- Bowley, Graham (April 25, 2011). “Preserving a Market Symbol”. The New York Times. Retrieved 7 August 2014.

- “Agent-Human Interactions in the Continuous Double Auction” (PDF), IBM T.J.Watson Research Center, August 2001

- Gjerstad, Steven; Dickhaut, John (January 1998). “Price Formation in Double Auctions”. Games and Economic Behavior. 22 (1): 1–29. doi:10.1006/game.1997.0576

- Cliff, D. (August 1997). “Minimal Intelligence Agents for Bargaining Behaviours in Market-Based Environments”. Hewlett-Packard Laboratories Technical Report 97-91.

- Leshik, Edward; Cralle, Jane (2011). An Introduction to Algorithmic Trading: Basic to Advanced Strategies. West Sussex, UK: Wiley. p. 169. ISBN 978-0-470-68954-7.

- “Algo Arms Race Has a Leader – For Now”, NYU Stern School of Business, December 18, 2006

- “High-Frequency Firms Tripled Trades in Stock Rout, Wedbush Says”. Bloomberg/Financial Advisor. August 12, 2011. Retrieved 26 March 2013.

- Siedle, Ted (March 25, 2013). “Americans Want More Social Security, Not Less”. Forbes. Retrieved 26 March 2013.

- “The Application of Pairs Trading to Energy Futures Markets” (PDF).

- Jackie Shen (2013), A Pre-Trade Algorithmic Trading Model under Given Volume Measures and Generic Price Dynamics (GVM-GPD), available at SSRN or DOI.

- Jackie Shen and Yingjie Yu (2014), Styled Algorithmic Trading and the MV-MVP Style, available at SSRN.

- Jackie (Jianhong) Shen (2017), Hybrid IS-VWAP Dynamic Algorithmic Trading via LQR, available at SSRN.

- Wilmott, Paul (July 29, 2009). “Hurrying into the Next Panic”. The New York Times. p. A19. Retrieved July 29, 2009.

- “Trading with the help of ‘guerrillas’ and ‘snipers’” (PDF), Financial Times, March 19, 2007, archived from the original (PDF) on October 7, 2009

- Lemke and Lins, “Soft Dollars and Other Trading Activities,” § 2:29 (Thomson West, 2015–2016 ed.).

- Rob Curren, Watch Out for Sharks in Dark Pools, The Wall Street Journal, August 19, 2008, p. c5. Available at WSJ Blogs retrieved August 19, 2008

- Artificial intelligence applied heavily to picking stocks by Charles Duhigg, November 23, 2006

- “How To Build Robust Algorithmic Trading Strategies”. AlgorithmicTrading.net. Retrieved 2017-08-08.

- Geoffrey Rogow, Rise of the (Market) Machines, The Wall Street Journal, June 19, 2009

- “OlsenInvest – Scientific Investing” (PDF). Archived from the original (PDF) on February 25, 2012.

- Hendershott, Terrence, Charles M. Jones, and Albert J. Menkveld. (2010), “Does Algorithmic Trading Improve Liquidity?”, Journal of Finance, 66, pp. 1–33, doi:10.1111/j.1540-6261.2010.01624.x, SSRN 1100635

- Jovanovic, Boyan; Menkveld, Albert J. (2010). “Middlemen in Securities Markets”. Working paper. SSRN 1624329

- James E. Hollis (Sep 2013). “HFT: Boon? Or Impending Disaster?” (PDF). Cutter Associates. Retrieved July 1, 2014.

- “Citigroup to expand electronic trading capabilities by buying Automated Trading Desk”, The Associated Press, International Herald Tribune, July 2, 2007, retrieved July 4, 2007

- Event Arb Definition Amex.com, September 4th 2010

- “Quote Stuffing Definition”. Investopedia. Retrieved October 27, 2014.

- Diaz, David; Theodoulidis, Babis (January 10, 2012). “Financial Markets Monitoring and Surveillance: A Quote Stuffing Case Study”. SSRN 2193636.

- High-Speed Devices and Circuits with THz Applications by Jung Han Choi

- “Low Latency Trading”. Archived from the original on June 2, 2016. Retrieved April 26, 2015.

- “Low-Latency Trading”. SSRN 1695460.

- “Archived copy” (PDF). Archived from the original (PDF) on March 4, 2016. Retrieved April 26, 2015.

- FIXatdl – An Emerging Standard, FIXGlobal, December 2009

- Preis, T.; Paul, W.; Schneider, J. J. (2008), “Fluctuation patterns in high-frequency financial asset returns”, EPL, 82 (6): 68005, Bibcode:2008EL.....8268005P, doi:10.1209/0295-5075/82/68005

- Hendershott, Terrence; Jones, Charles M.; Menkveld, Albert J. (2010). “Does Algorithmic Trading Improve Liquidity?” (PDF). Journal of Finance. doi:10.1111/j.1540-6261.2010.01624.x. Archived from the original (PDF) on July 16, 2010.

- Lin, Tom C.W., The New Investor, 60 UCLA 678 (2013), available at: http://ssrn.com/abstract=2227498

- Black box traders are on the march The Telegraph, 27 August 2006

- Myners’ super-fast shares warning BBC News, Tuesday 3 November 2009.

- “FT.com / Search”.

- Cracking The Street’s New Math, Algorithmic trades are sweeping the stock market.

- The Associated Press, July 2, 2007 Citigroup to expand electronic trading capabilities by buying Automated Trading Desk, accessed July 4, 2007

- Knight Capital Group Provides Update Regarding August 1st Disruption To Routing In NYSE-listed Securities Archived August 4, 2012, at the Wayback Machine.

- Lauricella, Tom, and McKay, Peter A. “Dow Takes a Harrowing 1,010.14-Point Trip,” Online Wall Street Journal, May 7, 2010. Retrieved May 9, 2010

- “City trusts computers to keep up with the news”. Financial Times.

- “Traders News”. Traders Magazine. Archived from the original on July 16, 2011.

- Siemon’s Case Study Automated Trading Desk, accessed July 4, 2007

- “Future of computer trading”.

- “Future of computer trading”.

- “U.K. Foresight Study Slammed For HFT ‘Bias’”. Markets Media. October 30, 2012. Retrieved November 2, 2014.

- “Algorithmic Trading System Architecture”. QuantInsti. January 12, 2016.

- “Business and finance”. The Economist.

- “InformationWeek Authors – InformationWeek”. InformationWeek.

- “LSE leads race for quicker trades” by Alistair MacDonald The Wall Street Journal Europe, June 19, 2007, p.3

- “Milliseconds are focus in algorithmic trades”. Reuters. May 11, 2007.

- “Moving markets”. Retrieved 20 January 2015.

- Farmer, J. Doyne (November 1999). “Physicists attempt to scale the ivory towers of finance”. IEEE Xplore. 1: 26–39. arXiv:adap-org/9912002. doi:10.1109/5992.906615.

- Brown, Brian (2010). Chasing the Same Signals: How Black-Box Trading Influences Stock Markets from Wall Street to Shanghai. Singapore: John Wiley & Sons. ISBN 0-470-82488-3.

- FIXatdl version 1.1 released March, 2010

Source: Algorithmic trading, https://en.wikipedia.org/w/index.php?title=Algorithmic_trading&oldid=871194564 (last visited Dec. 2, 2018).
